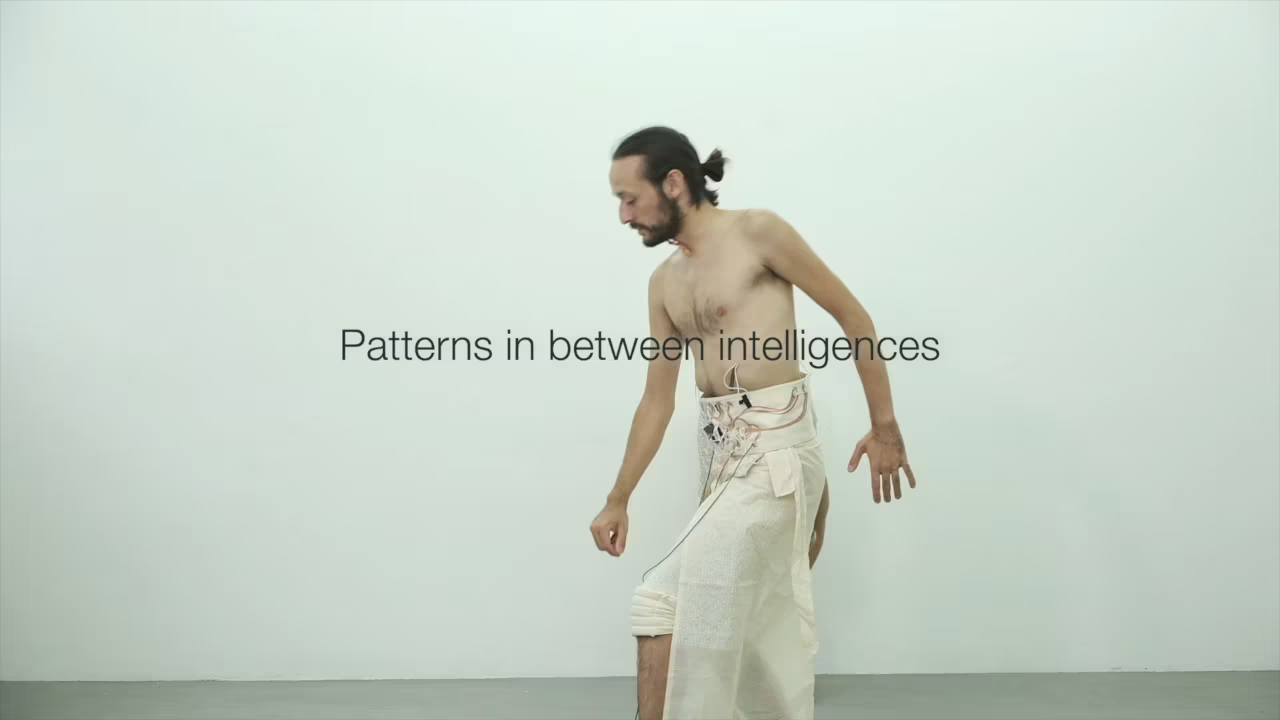

It’s been a privilege to collaborate on the Patterns In Between Intelligences project with fellow live coder Lizzie Wilson, e-textile designer Mika Satomi, and choreographers/performers Deva Schubert and Juan Felipe Amaya Gonzalez. The following video shows an early prototype and describes the motivation behind the work.

Our funding, via the LINK Masters programme, offered the conceptual frame of the still-trending topic of AI. Collectively, we didn’t want to produce a piece as a critique of AI (although Mika’s prior work has explored this well), or indeed an ‘art-washing’ project promoting AI, but rather to apply AI to thinking about and feeling the patterns between us. As a kind of technology for recognising or generating patterns, AI allows us to throw light on human aspects of collaboration, and on the patterns and resonances between people that form a kind of collective intelligence. To do this we used a range of AI tools in the work, including the RAVE model for ‘neural audio synthesis’, and machine learning in the live processing of sensor data from Mika’s e-textile costumes and fabric. We also saw the piece itself as forming connections between people, and so generating a collective intelligence of this kind.

You can read more about the first stages of our project in our paper “MosAIck: Staging Contemporary AI Performance – Connecting Live Coding, E-Textiles and Movement”, and/or watch the recording of the associated presentation delivered by Lizzie at the International Conference on Live Coding.

One aspect of my part in the collaboration was working with patterns created by moving humans. Mika, Lizzie and I worked together to capture live movements as continuous gestures. We looked for repetitions in the streams of data coming from the sensors in the costumes and other fabrics in the piece, recognised patterns within those repetitions, and then extracted, visualised and sonified them, feeding them back into the performance via our live-edited code. We have already taken this further than the performance described in the paper, and plan to take it further still in future phases of our collaboration.

From the perspective of a musician working with code, it was especially nice to have the timing of the piece driven by physical movements, rather than the monotonous clock of a computer. In collaborations I find that instrumentalists have to follow whatever tempo I decide on, but when working e.g. with a percussionist, it should be possible to just stick an accelerometer on their forearm and have their movements captured, in order to drive my tempo. This can be implemented straightforwardly via autocorrelation, and is a technique I have already taken to another collaborative performance with Kate Sicchio, which I will write more about in a future blog post.
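To give a rough idea of what tempo-from-movement via autocorrelation can look like, here is a minimal Python sketch. It is not the code used in the performance. The function names `estimate_period` and `period_to_bpm`, the search range of 0.25 to 2 seconds, and the idea of treating one movement cycle as one beat are all assumptions made for illustration. It takes a single channel of accelerometer readings, removes the constant offset, and picks the lag at which the signal best matches a delayed copy of itself.

```python
import numpy as np


def estimate_period(signal, sample_rate, min_period=0.25, max_period=2.0):
    """Estimate the dominant repetition period of a 1-D signal, in seconds.

    min_period and max_period bound the search (assumed values, chosen
    here to cover roughly 30 to 240 cycles per minute).
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()  # remove constant offset, e.g. gravity on an accelerometer axis

    # Autocorrelation for all lags; keep only the non-negative lags.
    ac = np.correlate(x, x, mode="full")[len(x) - 1:]

    # Convert the period bounds from seconds to lags in samples.
    lo = int(min_period * sample_rate)
    hi = min(int(max_period * sample_rate), len(ac) - 1)
    if hi <= lo:
        raise ValueError("signal too short for the requested period range")

    # The strongest peak inside the range is taken as the repetition period.
    lag = lo + int(np.argmax(ac[lo:hi + 1]))
    return lag / sample_rate


def period_to_bpm(period_seconds):
    """Convert a period to beats per minute, assuming one beat per cycle."""
    return 60.0 / period_seconds


if __name__ == "__main__":
    # Synthetic stand-in for a forearm moving twice per second, sampled at 100 Hz.
    sample_rate = 100
    t = np.arange(1000) / sample_rate
    fake_accel = 9.81 + np.sin(2 * np.pi * 2.0 * t)
    period = estimate_period(fake_accel, sample_rate)
    print(period, period_to_bpm(period))
```

In a live setting this would presumably run over a sliding window of recent sensor data, so that the estimated tempo can follow the performer as they speed up or slow down. Real movement data is also much noisier than a sine wave, so some smoothing of the estimate would likely be needed.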

Wilson, Elizabeth, Schubert, Deva, Satomi, Mika, McLean, Alex, & Amaya Gonzalez, Juan Felipe. (2023, April 18). MosAIck: Staging Contemporary AI Performance – Connecting Live Coding, E-Textiles and Movement. 7th International Conference on Live Coding (ICLC2023), Utrecht, Netherlands. https://doi.org/10.5281/zenodo.7843540